Decision Trees (Part 2): CART – One is a Regression Tree, the Other is a Classification Tree (Practical Data Analysis 12)

Learn about the CART decision tree algorithm: a powerful tool for classification and regression. Understand the Gini index, pruning techniques, and a Python implementation.

Welcome to the "Practical Data Analysis" Series

In the last lesson, we talked about decision trees. Depending on how they measure the quality of a split (information gain, information gain ratio, or the Gini index), decision tree algorithms can be divided into ID3, C4.5, and CART.
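To make "different ways of measuring" a little more concrete, here is a minimal sketch of two impurity measures: entropy, on which ID3's information gain and C4.5's gain ratio are built, and Gini impurity, which CART uses for classification trees. The helper names `entropy` and `gini` and the sample labels are hypothetical, chosen only for illustration.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy in bits (the basis of ID3/C4.5 split criteria)."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (CART)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

# A node with an even 50/50 class mix is maximally impure for two classes.
labels = ["A", "A", "B", "B"]
print(entropy(labels))  # 1.0
print(gini(labels))     # 0.5
```

Both measures are zero for a pure node and largest for an evenly mixed one; they differ in scale and shape, which is why ID3/C4.5 and CART can pick different splits on the same data.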

Today, I'll guide you through the CART algorithm.

CART stands for Classification And Regression Tree.

ID3 and C4.5 can generate either binary or multiway trees, whereas CART only builds binary trees: every internal node splits into exactly two branches. CART is also unusual in that the same algorithm can build either a classification tree or a regression tree.
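One way to see the binary-tree property in practice is scikit-learn, whose documentation describes its trees as an optimized version of CART. The sketch below (assuming scikit-learn is installed, and using its bundled Iris dataset purely as convenient sample data) fits a tree and checks that every internal node has exactly two children.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
clf = DecisionTreeClassifier(random_state=0).fit(X, y)

tree = clf.tree_
# In scikit-learn's tree structure, leaves have children_left == -1.
internal = [i for i in range(tree.node_count) if tree.children_left[i] != -1]

# Every internal node should have a right child as well, i.e. exactly two branches.
all_binary = all(tree.children_right[i] != -1 for i in internal)
print(len(internal), "internal nodes; all binary:", all_binary)
```

Because each split is a single threshold test (`feature <= threshold`), a full binary tree always has one more leaf than it has internal nodes.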

First, you need to understand what a classification tree is and what a regression tree is.

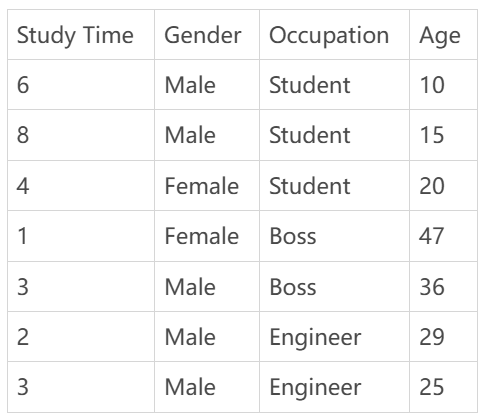

Take the training data above as an example: people with different occupations have different ages and study times.

If I build a decision tree that predicts a person's occupation from this data, it is a classification tree, because its output is chosen from a fixed set of categories.
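As a minimal sketch of a classification tree, the code below uses scikit-learn's `DecisionTreeClassifier` with the Gini criterion. The rows are hypothetical toy data invented for this example, not the values in the image: each person is described by `[age, study_time]`, and the target is an occupation label.

```python
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy data (NOT the table in the image): [age, study_time]
X = [[20, 3], [22, 4], [35, 1], [40, 1], [28, 6], [30, 7]]
y = ["student", "student", "teacher", "teacher", "engineer", "engineer"]

clf = DecisionTreeClassifier(criterion="gini", random_state=0).fit(X, y)

# The prediction is always one of the known categories.
print(clf.predict([[21, 3]])[0])  # student
```

The output is a category label, never a number in between, which is exactly what makes this a classification tree.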

If the goal is instead to predict a person's age from the data, the tree is a regression tree.
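A matching sketch for the regression case uses scikit-learn's `DecisionTreeRegressor`. Again the data is hypothetical, invented for illustration: a single feature (study time) is used to predict age, and `max_depth=1` limits the tree to one split so the result is easy to check by hand.

```python
from sklearn.tree import DecisionTreeRegressor

# Hypothetical toy data (NOT the table in the image): study_time -> age
X = [[1], [1], [3], [4], [6], [7]]
y = [35, 40, 20, 22, 28, 30]

# One split only; scikit-learn's default regression criterion is squared error.
reg = DecisionTreeRegressor(max_depth=1, random_state=0).fit(X, y)

# Each leaf predicts the mean age of its training samples: 37.5 and 25.0 here.
print(reg.predict([[1.5], [5]]))
```

The best single split separates study time 1 from the rest, so the two leaves hold the mean ages (35 + 40) / 2 = 37.5 and (20 + 22 + 28 + 30) / 4 = 25.0. Unlike the classifier, the output is a continuous number.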

A classification tree predicts a discrete target, that is, one of a limited number of categories, and outputs the class of the sample. A regression tree predicts a continuous target, which can take any value within a certain range, and outputs a numerical value.
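The difference in output comes down to what a leaf returns. Here is a minimal from-scratch sketch, with hypothetical helper names: a classification leaf returns the majority class of the samples that reach it, while a regression leaf returns their mean, which is the value that minimizes squared error, the criterion commonly used for CART regression trees.

```python
from collections import Counter

def classification_leaf(labels):
    # A classification leaf outputs a category: the most common class in the leaf.
    return Counter(labels).most_common(1)[0][0]

def regression_leaf(values):
    # A regression leaf outputs a number: the mean target value in the leaf.
    return sum(values) / len(values)

print(classification_leaf(["teacher", "teacher", "student"]))  # teacher
print(regression_leaf([20, 22, 27]))                           # 23.0
```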