Why Your Python Scrapers Keep Failing

6 practical Python techniques for getting past anti-bot walls and keeping your scrapers alive, while staying robots.txt safe and handling CAPTCHAs.

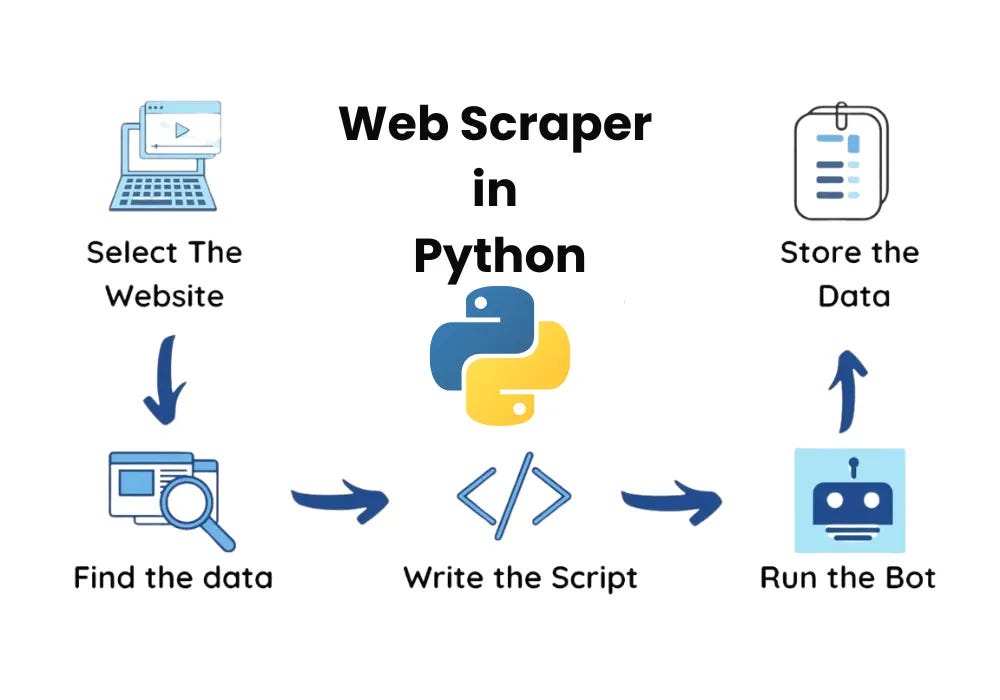

Many people learn Python specifically for web scraping, that is, collecting data from web pages. Yet even Python veterans struggle to collect data reliably, because scrapers break constantly.

Major websites now deploy increasingly sophisticated anti-scraping strategies. Beyond flagging abnormal request rates from a single IP and suspicious User-Agent headers, they analyze IP addresses, fingerprint browsers, load content dynamically with JavaScript, harden their APIs against reverse engineering, and track behavior patterns. CAPTCHAs of every kind can pop up at any moment, which makes these defenses extremely hard to deal with.

Countering anti-scraping measures is a systematic problem that takes several strategies working together. It also has a legal side: you must respect each website's robots.txt rules. Running some automated tests or collecting small amounts of public data is generally fine, but you must never do anything that disrupts the website, such as flooding it with requests.
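As a rough illustration of the robots.txt point, here is a minimal sketch that uses the standard library's `urllib.robotparser` to check whether a URL may be fetched before requesting it. The robots.txt content, the `my-scraper` user agent, and the `example.com` URLs are all made up for the example; a real scraper would load the site's actual file instead of an inlined string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs offline.
# A real scraper would use set_url(...) and read() to fetch the site's file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

# Hypothetical user agent name for this example.
USER_AGENT = "my-scraper"


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Check individual URLs against the rules before fetching them.
public_ok = parser.can_fetch(USER_AGENT, "https://example.com/articles/1")
private_ok = parser.can_fetch(USER_AGENT, "https://example.com/private/data")

# Seconds to wait between requests, or None if the file sets no Crawl-delay.
delay = parser.crawl_delay(USER_AGENT)

print(f"public page allowed: {public_ok}")
print(f"private page allowed: {private_ok}")
print(f"crawl delay: {delay}")
```

Running a check like this before every request, and sleeping for the crawl delay when one is declared, is a cheap way to stay within what the site has asked of crawlers.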

In my view, six techniques matter most for making Python scrapers collect data more reliably.